Managing Usage and License Details

Prerequisites

- The user configured in the Snowflake default profile should have access to the PUBLIC database and the SNOWFLAKE database.

- Provide access privileges for the PUBLIC database defined under and for Snowflake Account Usage.

Usage and License Details

This section contains the following information:

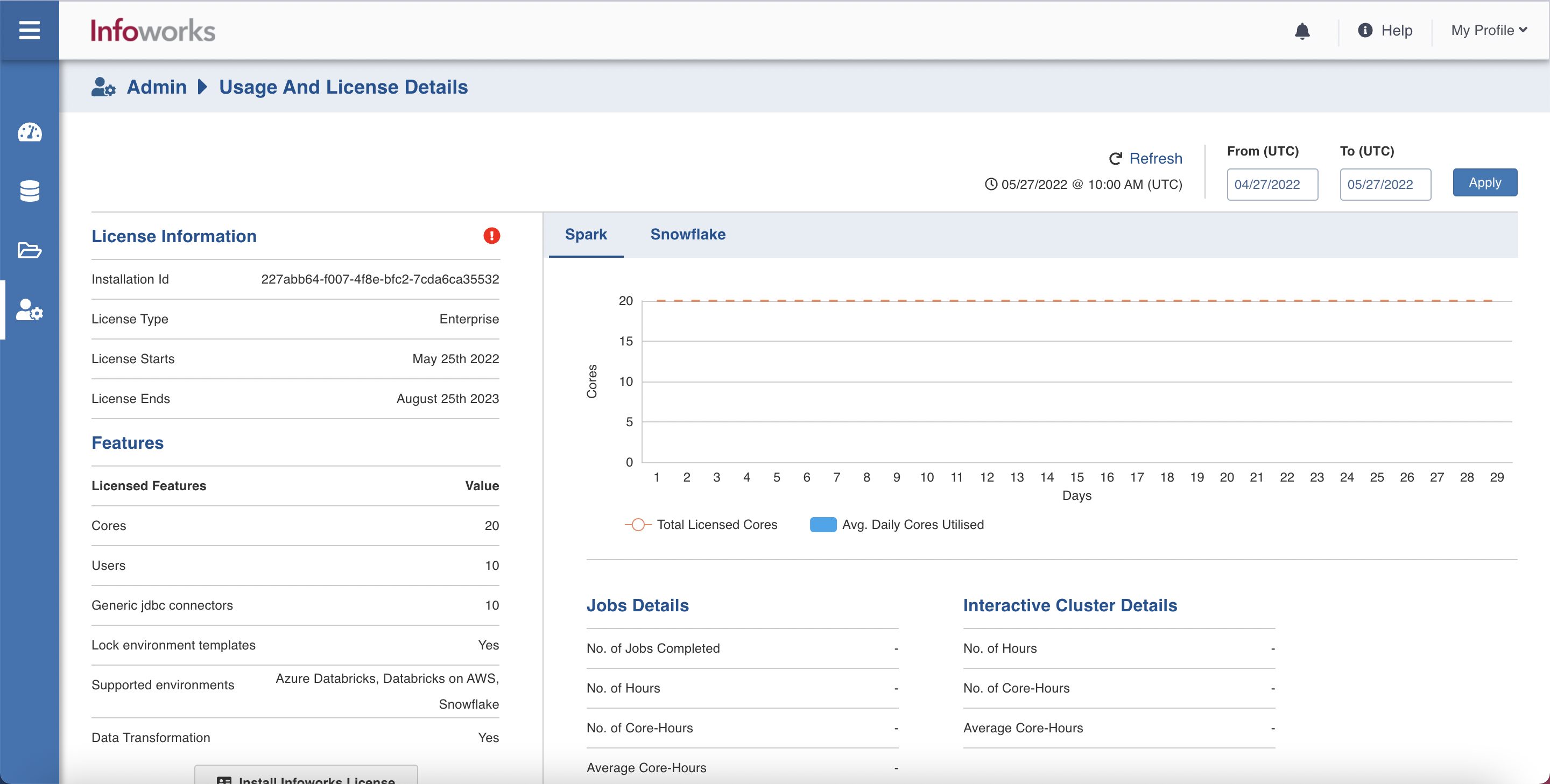

License Information: This table provides details such as Installation Id, License Type, License Starts, and License Ends.

License Features: This table provides details such as Cores, No. of users, Supported Environment Types, and Source Connectors licensed for this instance.

To view the usage details, perform the following steps:

- On the Admin page, click Usage and License Details.

- In the From (UTC) field, enter the start date in Coordinated Universal Time (UTC).

- In the To (UTC) field, enter the end date in Coordinated Universal Time (UTC).

- Click Apply.

The Usage Details feature is supported on all platforms: Azure Databricks, AWS Databricks, GCP Dataproc, Databricks on GCP, Amazon EMR, and Snowflake environments.

Users can view usage details, such as the number of jobs completed, number of hours, and number of core hours, in the Usage and License Details section of the Infoworks Admin page.

The Usage and License Details section displays the License Information and License Features of the Infoworks instance you are logged in to.

The Install New Infoworks License button lets you update the license key of the Infoworks instance.

The usage details of jobs and interactive clusters are also displayed on the page. Here, "jobs" refers to Infoworks jobs running on Databricks, and the interactive cluster refers to the long-running cluster used to provide sample output while creating transformation pipelines through collaborative interactive analysis.

The right-side panel of the billing dashboard has two tabs: Spark and Snowflake.

The graph under the Spark tab represents the following:

- Total Licensed Cores: The total number of cores licensed for your Infoworks instance. This value is displayed under the Features section on the same page.

- Avg. Daily Cores Utilised: The sum of the core hours of all Infoworks jobs on a given day, divided by 24.
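To make the Avg. Daily Cores Utilised calculation concrete, here is a minimal Python sketch. It assumes the core-hour sum is taken over the Infoworks jobs of a single day, so dividing by 24 gives the average number of cores kept busy across that day. The function `avg_daily_cores_utilised` and its input list are hypothetical illustrations, not part of Infoworks.

```python
from typing import Iterable

HOURS_PER_DAY = 24


def avg_daily_cores_utilised(job_core_hours: Iterable[float]) -> float:
    """Average number of cores in use over one day.

    job_core_hours: core hours of each Infoworks job that ran on that day
    (hypothetical input; Infoworks computes these values internally).
    """
    return sum(job_core_hours) / HOURS_PER_DAY


# Three jobs using 12, 30 and 6 core hours on the same day (48 in total)
print(avg_daily_cores_utilised([12.0, 30.0, 6.0]))  # 2.0
```

Here 48 core hours in one day average out to 2 cores in use around the clock, a figure that can be read against the Total Licensed Cores line on the same graph.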

The following are descriptions of the job details displayed in the Jobs Details section:

- No. of Jobs Completed: Total number of jobs completed between 00:00:00 on the selected start date and 00:00:00 on the selected end date. Because the calculation stops at 00:00:00 on the end date, the end date itself is excluded from the usage details.

- No. of Hours: Sum of the time taken by each Infoworks job.

- No. of Core-Hours: Sum of the core hours of each Infoworks job. Core hours = CPU time, in hours, derived from the Spark executors of each Infoworks job.
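The following sketch illustrates how the three job metrics relate to the selected date range. It assumes each job record carries a completion timestamp in UTC, a duration in hours, and the CPU time of its Spark executors in seconds; the `Job` record and the `job_usage` and `utc_midnight` functions are hypothetical. The sketch treats the window as half-open: it includes 00:00:00 on the start date and stops at 00:00:00 on the end date, so nothing completed on the end date is counted.

```python
from dataclasses import dataclass
from datetime import date, datetime, time, timezone
from typing import List, Tuple


@dataclass
class Job:
    # Hypothetical job record; field names are illustrative only.
    completed_at: datetime        # completion time, timezone-aware UTC
    duration_hours: float         # time taken by the job, in hours
    executor_cpu_seconds: float   # CPU time summed over the job's Spark executors


def utc_midnight(d: date) -> datetime:
    """00:00:00 UTC on the given date."""
    return datetime.combine(d, time(0, 0), tzinfo=timezone.utc)


def job_usage(jobs: List[Job], start: date, end: date) -> Tuple[int, float, float]:
    """Return (jobs completed, hours, core hours) for [start 00:00, end 00:00) UTC."""
    lo, hi = utc_midnight(start), utc_midnight(end)
    in_window = [j for j in jobs if lo <= j.completed_at < hi]
    count = len(in_window)
    hours = sum(j.duration_hours for j in in_window)
    # Core hours: executor CPU time converted from seconds to hours.
    core_hours = sum(j.executor_cpu_seconds for j in in_window) / 3600
    return count, hours, core_hours


utc = timezone.utc
jobs = [
    Job(datetime(2024, 3, 1, 9, 0, tzinfo=utc), 1.5, 7200.0),
    Job(datetime(2024, 3, 2, 23, 59, tzinfo=utc), 0.5, 3600.0),
    Job(datetime(2024, 3, 3, 0, 0, tzinfo=utc), 2.0, 10800.0),  # on the end date: excluded
]
print(job_usage(jobs, date(2024, 3, 1), date(2024, 3, 3)))  # (2, 2.0, 3.0)
```

To include jobs that complete on 3 March in this example, the end date would have to be set to 4 March.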

The following are descriptions of the interactive cluster details displayed in the Interactive Cluster Details section:

- No. of Hours: Total time the interactive cluster was running during the selected period.

- No. of Core-Hours: Total core hours consumed by the interactive cluster. Core hours = number of cores used by the interactive cluster multiplied by its running time in hours.
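The interactive cluster formula is a straight multiplication. The sketch below assumes the cluster kept a fixed number of cores for the whole period; `interactive_core_hours` is a hypothetical helper, not an Infoworks function.

```python
def interactive_core_hours(cores: int, running_hours: float) -> float:
    """Core hours of the interactive cluster: cores multiplied by running time in hours."""
    return cores * running_hours


# A 16-core interactive cluster that ran for 5.5 hours in the selected period
print(interactive_core_hours(16, 5.5))  # 88.0
```

If the cluster was resized during the period, one option (an assumption, not documented behaviour) is to apply the function to each fixed-size interval and add the results.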

The graph under the Snowflake tab represents the following:

Average Daily Snowflake Credits Utilised: Calculated as ((Time taken to execute all Infoworks queries on all data warehouses on a particular day) / (Time taken to execute all queries on all data warehouses on that day)) * (Total number of credits consumed on that day)

For the current day, billing data is refreshed every 30 minutes and updated in the dashboard.

The Snowflake Compute Details section displays the following information:

Snowflake Compute Credits: Snowflake Compute Credits is the metric used for billing. For a given warehouse (WH) and time period X, it is calculated as ((time to execute Infoworks (IW) queries in that WH within time period X) / (total time to execute all queries in that WH within the same time period X)) * (total Virtual Warehouse and Cloud Service credits consumed in that WH during time period X)
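A minimal sketch of the Snowflake Compute Credits formula for a single warehouse over one period X. It assumes the query execution times and the warehouse's credit total (virtual warehouse plus cloud services) for that period are already available. In Snowflake, such figures are exposed through the SNOWFLAKE.ACCOUNT_USAGE views (for example QUERY_HISTORY and WAREHOUSE_METERING_HISTORY), though the exact columns Infoworks reads are not stated here. The function `infoworks_compute_credits` and its inputs are hypothetical.

```python
from typing import Sequence


def infoworks_compute_credits(
    iw_query_seconds: Sequence[float],
    all_query_seconds: Sequence[float],
    warehouse_credits: float,
) -> float:
    """Credits attributed to Infoworks (IW) queries on one warehouse for period X.

    iw_query_seconds:  execution time of each IW query on the warehouse in period X
    all_query_seconds: execution time of every query on the same warehouse in
                       period X (IW queries included)
    warehouse_credits: virtual warehouse + cloud services credits consumed by
                       the warehouse in period X
    """
    total = sum(all_query_seconds)
    if total == 0:
        return 0.0  # no queries ran, so nothing is attributed to Infoworks
    return sum(iw_query_seconds) / total * warehouse_credits


iw = [120.0, 60.0]                 # two Infoworks queries (180 s)
everything = iw + [300.0, 120.0]   # plus two non-Infoworks queries (600 s in total)
print(infoworks_compute_credits(iw, everything, 4.0))  # 1.2
```

Infoworks queries account for 30% of the execution time in this example, so they are attributed 30% of the 4 credits the warehouse consumed.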

Total Snowflake Compute Credits: The total number of Snowflake Compute Credits used over the selected range of days, as shown in the graph.

Average Daily Snowflake Compute: The average number of Snowflake Compute Credits used per day over the selected range of days.
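The two summary figures can be derived from the per-day credit values plotted in the graph. The sketch below assumes the average is taken over the days supplied; how days with no Infoworks activity are counted is not stated above, so this version simply divides by the number of daily values given. The function `credit_summary` is hypothetical.

```python
from datetime import date
from typing import Dict, Tuple


def credit_summary(daily_credits: Dict[date, float]) -> Tuple[float, float]:
    """Return (total, average daily) Snowflake Compute Credits for the selected days."""
    if not daily_credits:
        return 0.0, 0.0
    total = sum(daily_credits.values())
    return total, total / len(daily_credits)


days = {date(2024, 3, 1): 1.25, date(2024, 3, 2): 0.75, date(2024, 3, 3): 2.5}
print(credit_summary(days))  # (4.5, 1.5)
```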