External Dependency

External dependencies are files that are not part of the Infoworks package but are required for the setup to function correctly. They vary with each organization's infrastructure. To use these dependency files with Infoworks, place them in a directory structure that the Infoworks environment can access.

The examples in this section assume that the IW_HOME variable is set to /opt/infoworks.

Create the following default directories and use them to hold all of the external dependencies:

- /opt/infoworks/uploads/lib/external-dependencies/common/spark_2x_211/cosmos_db

- /opt/infoworks/uploads/lib/external-dependencies/common/spark_2x_211/teradata

- /opt/infoworks/uploads/lib/external-dependencies/common/spark_2x_212/cosmos_db

- /opt/infoworks/uploads/lib/external-dependencies/common/spark_2x_212/teradata

- /opt/infoworks/uploads/lib/external-dependencies/common/spark_3x_212/cosmos_db

- /opt/infoworks/uploads/lib/external-dependencies/common/spark_3x_212/teradata

Copy the external dependency files or JARs that your setup requires into each of the directories listed above.

To copy the files from your local system to a specific location inside the Kubernetes pods, perform the following steps:

Step 1: Run the following command to create all of the directories listed above in your local file system.

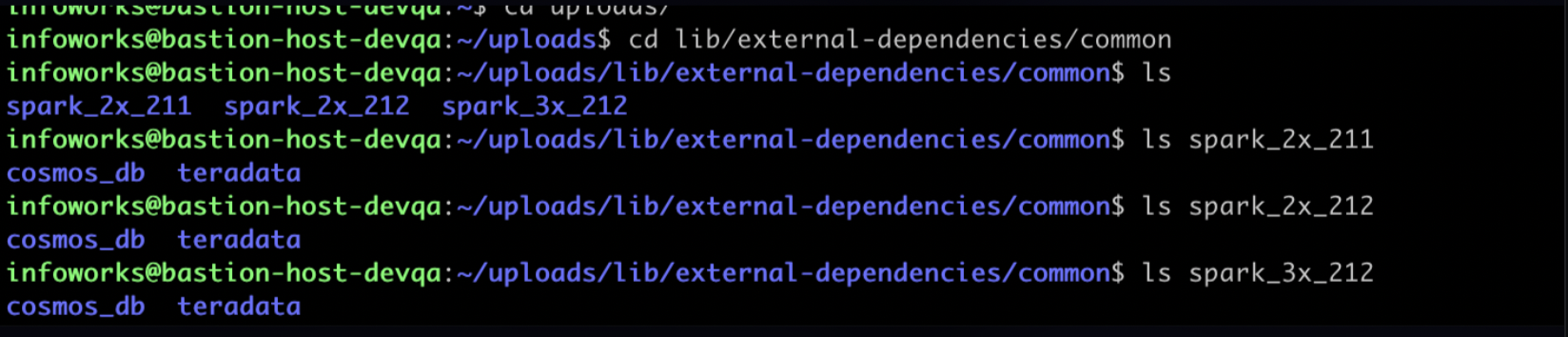

```shell
mkdir -p ${IW_HOME}/uploads/lib/external-dependencies/common/{spark_2x_211,spark_2x_212,spark_3x_212}/{cosmos_db,teradata}
```

The brace expansion in this command requires a shell such as bash or zsh.

Step 2 (Optional): To validate the directory structure, run the following commands.

```shell
cd ${IW_HOME}/uploads/lib/external-dependencies/common
ls
ls spark_2x_211
ls spark_2x_212
ls spark_3x_212
```
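If you prefer a pass/fail check to reading `ls` output, the following POSIX shell sketch tests each of the six expected directories. It assumes IW_HOME is set as described above; `check_dep_dirs` is a hypothetical helper name, not an Infoworks command. Because it uses nested `for` loops instead of brace expansion, it also runs under plain `sh`.

```shell
#!/bin/sh
# Hypothetical helper (not part of Infoworks): verify that every expected
# external-dependency directory exists under ${IW_HOME}/uploads.
check_dep_dirs() {
  base="${IW_HOME:?IW_HOME is not set}/uploads/lib/external-dependencies/common"
  missing=0
  for spark in spark_2x_211 spark_2x_212 spark_3x_212; do
    for conn in cosmos_db teradata; do
      dir="$base/$spark/$conn"
      if [ -d "$dir" ]; then
        echo "OK       $dir"
      else
        echo "MISSING  $dir"
        missing=$((missing + 1))
      fi
    done
  done
  # Return a non-zero status if at least one directory is absent
  [ "$missing" -eq 0 ]
}
```

Run `check_dep_dirs` after Step 1; it prints one OK or MISSING line per directory and returns a non-zero exit status if any directory is missing.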

Step 3: Copy all the required dependency files and JARs into each of these directories.

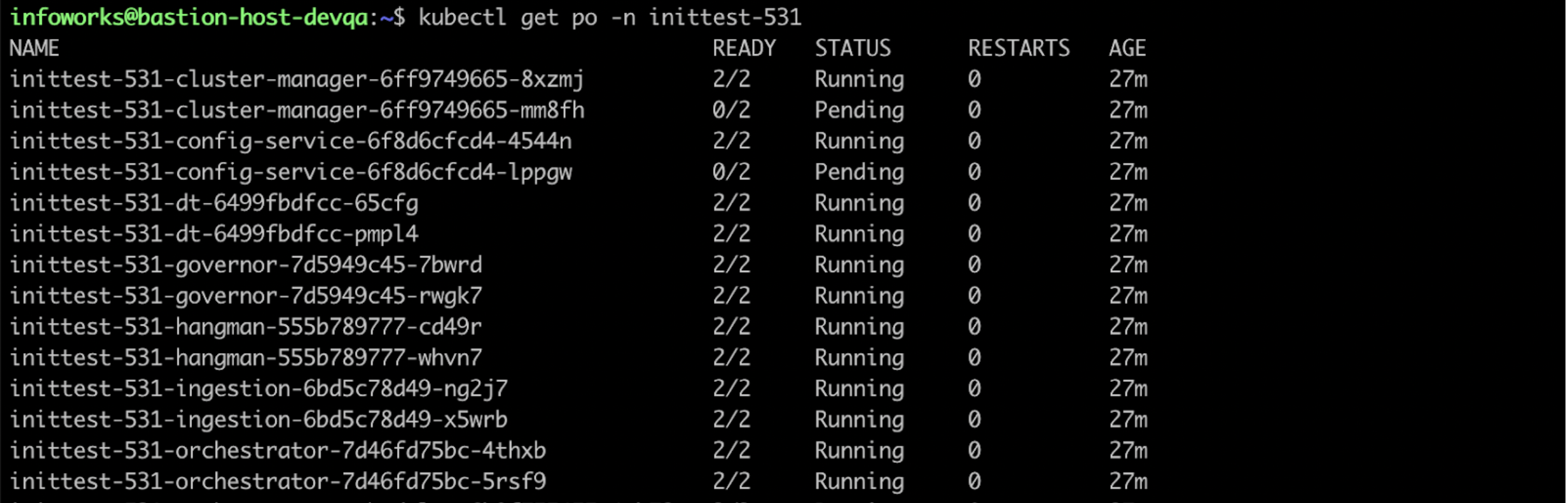
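To avoid copying the same files six times by hand, a loop such as the one below can place them in every directory. This is a minimal sketch that assumes IW_HOME is set and that Step 1 has already created the directories; `push_deps` is a hypothetical name. It follows the instruction above to push each file to all directories. If a JAR applies only to one connector or one Spark/Scala version, copy it only to the matching directory instead.

```shell
#!/bin/sh
# Hypothetical helper (not part of Infoworks): copy the given files into all
# six external-dependency directories created in Step 1.
push_deps() {
  if [ "$#" -eq 0 ]; then
    echo "usage: push_deps FILE..." >&2
    return 1
  fi
  base="${IW_HOME:?IW_HOME is not set}/uploads/lib/external-dependencies/common"
  for spark in spark_2x_211 spark_2x_212 spark_3x_212; do
    for conn in cosmos_db teradata; do
      # Copy every file given on the command line; -p preserves mode and timestamps
      cp -p "$@" "$base/$spark/$conn/" || return 1
    done
  done
}
```

For example: `push_deps /path/to/dependency1.jar /path/to/dependency2.jar`.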

Step 4: Once all the dependencies are in place, switch to the ${IW_HOME}/uploads directory (the parent of lib), and run the following command to list the pods. Note the name of the ingestion pod.

```shell
kubectl get pods -n <namespace>
```
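Instead of reading the pod list by eye, you can extract the ingestion pod name with kubectl's custom-columns output. This sketch assumes that the ingestion pod's name contains the string "ingestion", as in the example pod name used in Step 5, and that only the first match is wanted; `find_ingestion_pod` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper (not part of Infoworks): print the name of the first pod
# in the given namespace whose name contains "ingestion".
find_ingestion_pod() {
  ns="${1:?usage: find_ingestion_pod NAMESPACE}"
  # --no-headers with a single custom column prints one bare pod name per line
  kubectl get pods -n "$ns" --no-headers -o custom-columns=NAME:.metadata.name \
    | grep ingestion \
    | head -n 1
}
```

The result can be substituted into the Step 5 command, for example `kubectl cp lib/ inittest-531/$(find_ingestion_pod inittest-531):/opt/infoworks/uploads -c ingestion`.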

Step 5: From the same directory, copy the lib directory with the JARs to the ingestion pod using the following command.

```shell
kubectl cp lib/ <your_namespace>/<specific-pod>:/opt/infoworks/uploads -c <container_name>
```

For example:

```shell
kubectl cp lib/ inittest-531/inittest-531-ingestion-958498c8c-46l82:/opt/infoworks/uploads -c ingestion
```

Validation

To validate that all the required files were copied into the ingestion pod, perform the following steps:

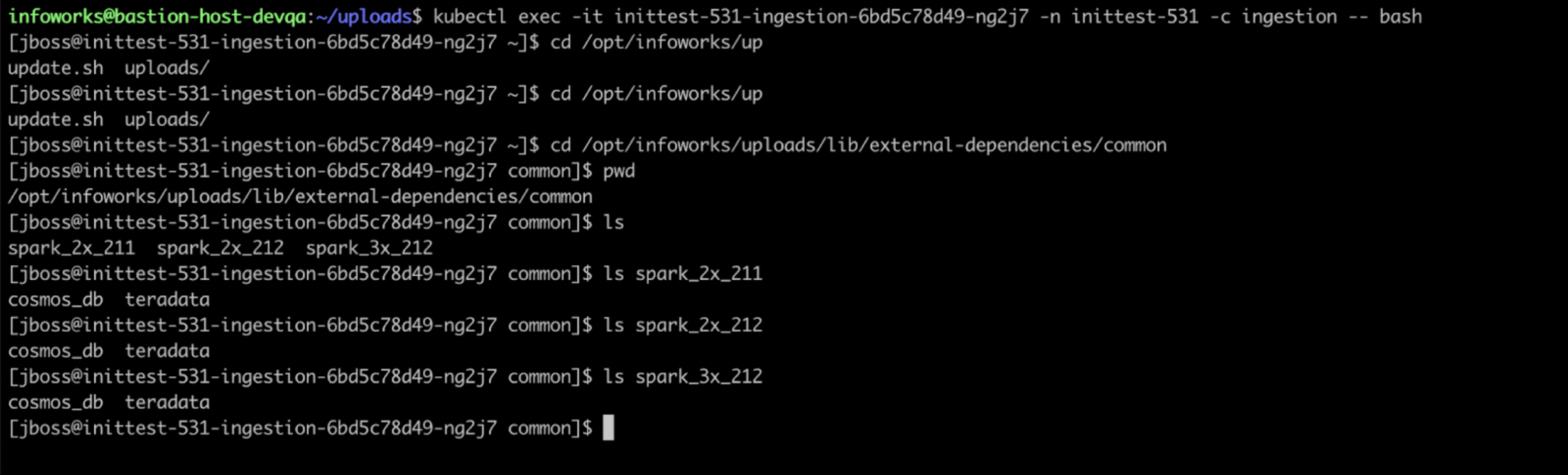

Step 1: Open a shell in the container of the pod to which you copied the files.

```shell
kubectl exec -it <pod_name> -n <namespace> -c <container_name> -- bash
```

For example:

```shell
kubectl exec -it inittest-531-ingestion-958498c8c-46l82 -n inittest-531 -c ingestion -- bash
```

Step 2: Navigate to the directory by running `cd /opt/infoworks/uploads/lib/external-dependencies/common`.

In this path, you should see the directory structure lib/external-dependencies/common/{spark_2x_211,spark_2x_212,spark_3x_212}/{cosmos_db,teradata}, which contains all your dependencies.
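Alternatively, you can check the copied files without opening an interactive shell by running `ls` through `kubectl exec` for each directory. This is a sketch: `list_pod_deps` is a hypothetical helper, and it assumes the files were copied to /opt/infoworks/uploads as in Step 5.

```shell
#!/bin/sh
# Hypothetical helper (not part of Infoworks): list each external-dependency
# directory inside a pod's container without an interactive shell.
# Usage: list_pod_deps NAMESPACE POD CONTAINER
list_pod_deps() {
  ns="$1"; pod="$2"; container="$3"
  base=/opt/infoworks/uploads/lib/external-dependencies/common
  for spark in spark_2x_211 spark_2x_212 spark_3x_212; do
    for conn in cosmos_db teradata; do
      echo "== $spark/$conn"
      # Stop at the first directory that cannot be listed (for example, if it is missing)
      kubectl exec "$pod" -n "$ns" -c "$container" -- ls "$base/$spark/$conn" || return 1
    done
  done
}
```

For example: `list_pod_deps inittest-531 inittest-531-ingestion-958498c8c-46l82 ingestion`. An empty listing under a heading means that directory contains no files, and a non-zero exit status means a directory could not be listed.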