v5.4.0.5

Date of Release: April 2023

Resolved Issues

| JIRA ID | Issue |
|---|---|
| IPD-21604 | User is unable to use Snowflake secondary roles in pipelines. |
| IPD-21703 | The jobs are intermittently failing due to Service Account Authorization Token expiry. |
| IPD-21277 | During BTEQ conversion, join condition contains invalid column references resulting in validation error. |
| IPD-21280 | The SQL Import adds the IN and UNION condition in the where clause which throws a validation error. |
| IPD-21475 | During BTEQ conversion, pipelines are creating additional target tables before the union node. |
| IPD-21613 | The SQL import fails with the null pointer exception for the aggregate node. |
| IPD-21618 | The job_object.json file from Infoworks ingestion/pipeline job logs contains Databricks token in clear text. |
| IPD-21729 | Password is being shown in the logs when Snowflake source is being used. |
| IPD-21186 | During BTEQ conversion, the "insert into..." query is getting created in overwrite mode for the existing table. |
| IPD-21275 | The NOT IN condition is getting added in join node. |
| IPD-21309 | If an SQL query has multiple CASE statements without column alias, it is creating only one derivation instead of appropriate number of column ports. |
| IPD-21407 | While configuring SFTP, if user selects "Using Private Key" as the Authentication Mechanism type and selects Private Key option button, it results in internal server error afterwards. |
| IPD-21420 | The feature to retrieve private key for SFTP source from Key vault Secret store is not available. |
| IPD-21427 | Pipeline build is adding null columns in "insert into..." query while using the reference table. |
| IPD-21441 | There is no configuration to automatically deselect all the ziw columns on the target node. |
| IPD-21450 | Operations Dashboard is not displaying Speedometer charts at the top of the page. |
| IPD-21451 | Pipeline Preview Data is failing as it is unable to connect to Databricks Cluster. |
| IPD-21510 | No refresh tokens are generated for users created via SAML or LDAP authentication flow. |
| IPD-21503 | Operations Dashboard displays different count on speedometer chart and the actual list of jobs. |
| IPD-20984 | When user defined tables are selected for ingestion target, Infoworks attempts to create error tables in the target schema instead of the staging schema. |
| IPD-21178 | The sub-queries are not supported in IN/NOT-IN node during SQL Import. |
| IPD-21201 | The SQL import fails with duplicate ziw_row_id column while running the BTEQ converter. |
| IPD-21266 | The Pipeline lineage API does not return the source schema and source table name for pipelines built in Snowflake environment. |
| IPD-21319 | Some of the upstream pipeline columns are not getting projected to the target table during table update resulting in pipeline failure during runtime. |
| IPD-21260 | The column name is not extracted properly when the column name has schema_name.table_name value. |
| IPD-21272 | The Domain Summary page hangs when there are more than 100 pipelines in the domain. |
| IPD-21383 | Infoworks is overwriting spark driver and executor javaoptions resulting in users unable to add custom java options. |
| IPD-21247 | The pipeline build fails when Derive node (previous to the target node) is not part of the Pipeline configured with Update mode. |
| IPD-21299 | The Staging Schema Name field is not visible in the source setup page for Kafka sources on Snowflake. |

Upgrade

Prerequisites

- Ensure the current deployment’s chart is present in /opt/infoworks.

- Ensure Python 3.8 or later, with the pip module, is installed on the Bastion VM.

- Ensure the Bastion VM has stable internet connectivity to download the required Python packages from the Python repository during installation or upgrade.

- Validate that the version of the old chart is 5.4.0.

      cat /opt/infoworks/iw-k8s-installer/infoworks/Chart.yaml | grep appVersion

      appVersion: 5.4.0

Ensure that you take a backup of MongoDB Atlas and PostgresDB PaaS. If you do not take the backup, jobs will fail after the rollback operation. For more information, refer to MongoDB Backup and PostgresDB PaaS Backup.
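The prerequisite checks above can also be scripted. The following is a minimal sketch, not part of the Infoworks tooling: the function names `chart_app_version` and `version_ge` are hypothetical, and `version_ge` assumes a `sort` that supports the `-V` option (GNU coreutils). The example calls are commented out so that sourcing the sketch has no side effects.

```shell
#!/usr/bin/env bash
# Sketch only: these helper names are hypothetical, not part of the Infoworks tooling.

# Print the appVersion value from a Helm Chart.yaml (double quotes stripped).
chart_app_version() {
  local chart_file="$1"
  awk '$1 == "appVersion:" { gsub(/"/, "", $2); print $2; exit }' "$chart_file"
}

# Return 0 if version $1 is greater than or equal to version $2.
# Assumes a sort implementation that supports -V (version sort).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Example usage on the Bastion VM (commented out on purpose):
# chart="/opt/infoworks/iw-k8s-installer/infoworks/Chart.yaml"
# [ "$(chart_app_version "$chart")" = "5.4.0" ] || echo "Unexpected chart version" >&2
# py="$(python3 -c 'import platform; print(platform.python_version())')"
# version_ge "$py" "3.8" || echo "Python 3.8 or later is required" >&2
# python3 -m pip --version >/dev/null || echo "The pip module is missing" >&2
```

Comparing versions with a version sort rather than a string comparison avoids treating 3.10 as older than 3.8.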

Upgrade Instructions

To upgrade Infoworks on Kubernetes:

This procedure assumes that the existing chart is placed in the /opt/infoworks directory and that the user has access permission to it.

    export IW_HOME="/opt/infoworks"

Before selecting the type of upgrade, execute the following commands.

Step 1: Create the required directories and change the path to that directory.

    mkdir -p $IW_HOME/downloads
    cd $IW_HOME/downloads

Internet-free Upgrade

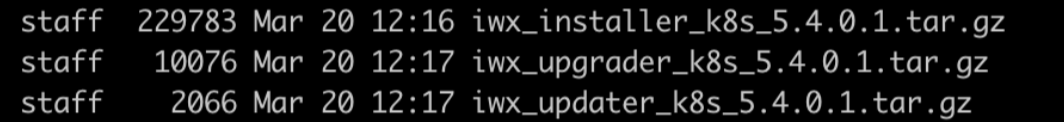

Step 1: Download the upgrade tar files shared by the Infoworks team to the Bastion (Jump host) VM and place them under $IW_HOME/downloads.

Step 2: To configure Internet-free upgrade, execute the following command:

    export INTERNET_FREE=true

Internet-based Upgrade

Step 1: Download the Update script tar file.

    wget https://iw-saas-setup.s3-us-west-2.amazonaws.com/5.4/iwx_updater_k8s_5.4.0.5.tar.gz

Common Steps for Both Internet-free and Internet-based

Step 1: Extract iwx_updater_k8s_5.4.0.5.tar.gz under $IW_HOME/downloads.

Do not extract the tar file to /opt/infoworks/iw-k8s-installer, as doing so would result in loss of data.

    tar xzvf iwx_updater_k8s_5.4.0.5.tar.gz

This should create two new files: update-k8s.sh and configure.sh.
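To confirm that the extraction produced both files before running the update script, a check along the following lines may help. This is a sketch under assumptions: `verify_updater_files` is a hypothetical helper, and it assumes the two files land directly in the directory where the archive was extracted.

```shell
#!/usr/bin/env bash
# Sketch only: verify_updater_files is a hypothetical helper, not part of the Infoworks tooling.

# Return 0 if both files named in the extraction step exist in the given directory;
# otherwise print each missing file to stderr and return 1.
verify_updater_files() {
  local dir="$1" missing=0 f
  for f in update-k8s.sh configure.sh; do
    if [ ! -f "$dir/$f" ]; then
      echo "Missing after extraction: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage (commented out on purpose):
# verify_updater_files "$IW_HOME/downloads" && echo "Updater files are in place"
```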

Step 2: Run the script.

    ./update-k8s.sh -v 5.4.0.5
    helm upgrade 531 /opt/infoworks/iw-k8s-installer/infoworks -n aks-upgrade-540 -f /opt/infoworks/iw-k8s-installer/infoworks/values.yaml
    Enter N to skip running the above command to upgrade the helm deployment. (timeout: 30 seconds): N
    Upgrade success

Step 3 (Applicable only for Databricks Persistent Clusters): A change in the Infoworks jar requires uninstalling the libraries and restarting the cluster. Without this step, stale jars remain on the cluster. Perform the following steps:

(i) Go to the Databricks workspace, navigate to the Compute page, and select the cluster that has stale jars.

(ii) In the Libraries tab, select all the Infoworks jars and click Uninstall.

(iii) From the Infoworks UI or the Databricks dashboard, select Restart Cluster.

Rollback

Prerequisites

- Before executing the rollback script, ensure that the IW_HOME variable is set.

- Assuming the Infoworks home directory is /opt/infoworks, run the below command to set the IW_HOME variable.

      export IW_HOME=/opt/infoworks

- Ensure the current deployment’s chart is present in /opt/infoworks.

- Validate that the version of the current chart is 5.4.0.5.

- Execute the below command to check the appVersion.

      cat $IW_HOME/iw-k8s-installer/infoworks/Chart.yaml | grep appVersion

      appVersion: 5.4.0.5

Ensure that you restore MongoDB Atlas and PostgresDB PaaS. If you did not take the backup, jobs will fail after the restore operation. For more information, refer to MongoDB Restore and PostgresDB Restore.
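The first rollback prerequisites can be checked together before the rollback script is run. The sketch below is illustrative only: `check_iw_home` is a hypothetical helper, and the Chart.yaml path is the one used by the appVersion check in these prerequisites.

```shell
#!/usr/bin/env bash
# Sketch only: check_iw_home is a hypothetical helper, not part of the rollback script.

# Return 0 if the given home directory is non-empty and contains the chart file
# referenced in the prerequisites; otherwise explain the problem on stderr.
check_iw_home() {
  local home="$1"
  if [ -z "$home" ]; then
    echo "IW_HOME is not set" >&2
    return 1
  fi
  if [ ! -f "$home/iw-k8s-installer/infoworks/Chart.yaml" ]; then
    echo "Chart.yaml not found under $home/iw-k8s-installer/infoworks" >&2
    return 1
  fi
  return 0
}

# Example usage (commented out on purpose):
# check_iw_home "${IW_HOME:-}" || exit 1
```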

Rollback Instructions

Step 1: Download the rollback script.

    wget https://iw-saas-setup.s3.us-west-2.amazonaws.com/5.4/rollback-k8s.sh

Step 2: Place the rollback script in the same directory as the existing iw-k8s-installer.

Step 3: Ensure you have access permission to the $IW_HOME directory.

Step 4: Give executable permission to the rollback script using the below command.

    chmod +x rollback-k8s.sh

Step 5: Run the script.

    ./rollback-k8s.sh -v 5.4.0

Step 6: You will receive the following prompt: "Enter N to skip running the above command to upgrade the helm deployment. (timeout: 30 seconds): ". Type Y and press Enter.

    Enter N to skip running the above command to upgrade the helm deployment. (timeout: 30 seconds): Y